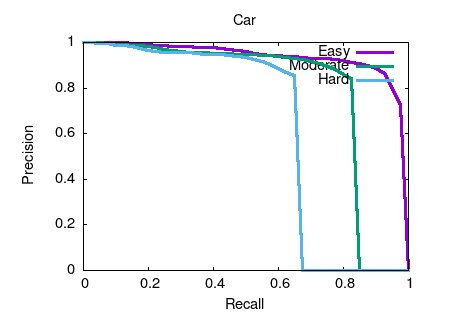

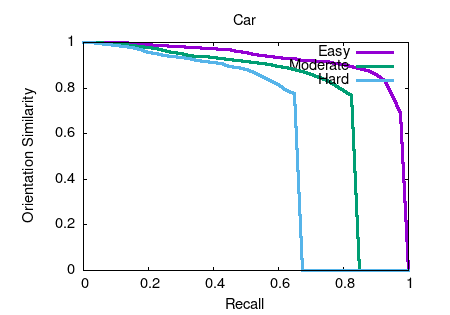

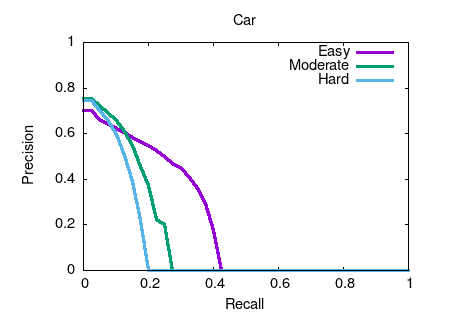

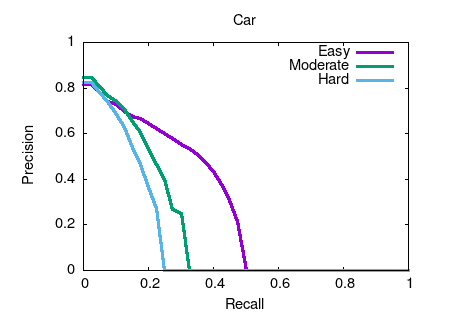

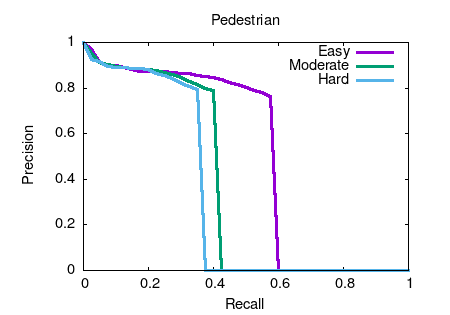

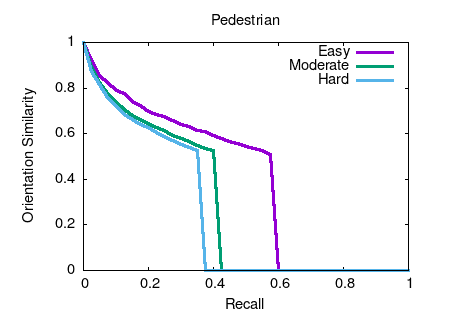

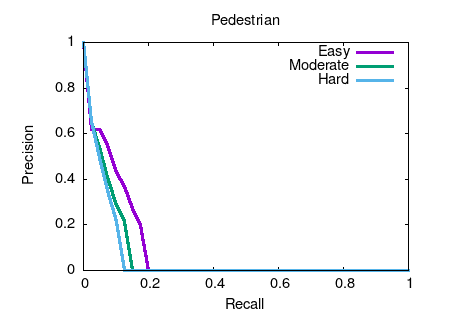

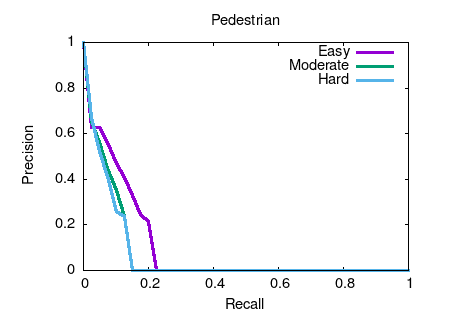

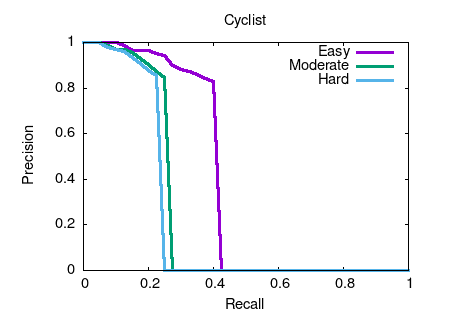

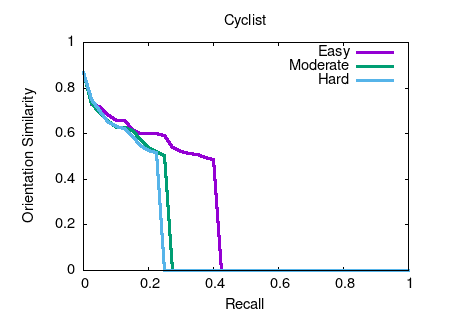

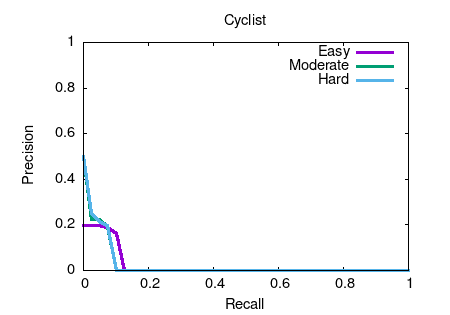

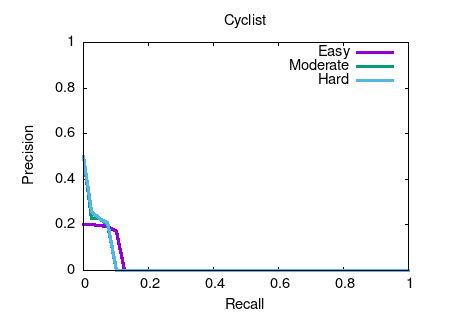

Object detection and orientation estimation results. Object detection results are reported as average precision (AP); joint object detection and orientation estimation results are reported as average orientation similarity (AOS).

| Benchmark | Easy | Moderate | Hard |
| --- | --- | --- | --- |
| Car (Detection) | 92.33 % | 78.21 % | 61.58 % |
| Car (Orientation) | 91.51 % | 75.95 % | 59.55 % |
| Car (3D Detection) | 20.28 % | 13.12 % | 9.56 % |
| Car (Bird's Eye View) | 27.39 % | 17.60 % | 13.25 % |
| Pedestrian (Detection) | 49.26 % | 34.74 % | 30.37 % |
| Pedestrian (Orientation) | 38.13 % | 26.28 % | 22.91 % |
| Pedestrian (3D Detection) | 7.62 % | 5.23 % | 4.28 % |
| Pedestrian (Bird's Eye View) | 8.69 % | 5.62 % | 5.25 % |
| Cyclist (Detection) | 37.41 % | 23.59 % | 21.20 % |
| Cyclist (Orientation) | 23.82 % | 15.24 % | 13.84 % |
| Cyclist (3D Detection) | 1.87 % | 1.60 % | 1.66 % |
| Cyclist (Bird's Eye View) | 1.91 % | 1.65 % | 1.75 % |
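To illustrate why each Orientation (AOS) entry in the table is no higher than the corresponding Detection (AP) entry, here is a minimal sketch of an orientation-similarity score and an interpolated average over recall thresholds. It is not the benchmark's evaluation code. The function names `orientation_similarity` and `interpolated_average` are hypothetical, and the 11 evenly spaced recall thresholds are an assumption; the official evaluation may sample recall differently.

```python
import math


def orientation_similarity(delta_thetas, matched):
    """Mean of (1 + cos(delta)) / 2 over all detections at one recall level.

    delta_thetas: angle differences (radians) between predicted and
                  ground-truth orientation, one per detection.
    matched:      booleans, True if the detection was assigned to a
                  ground-truth box; unmatched detections contribute 0.
    """
    if not delta_thetas:
        return 0.0
    total = sum(
        (1.0 + math.cos(d)) / 2.0 if m else 0.0
        for d, m in zip(delta_thetas, matched)
    )
    return total / len(delta_thetas)


def interpolated_average(recalls, scores, num_points=11):
    """Average, over evenly spaced recall thresholds in [0, 1], of the
    best score achieved at any recall >= the threshold.

    num_points=11 is an assumption made for this sketch.
    """
    total = 0.0
    for k in range(num_points):
        threshold = k / (num_points - 1)
        candidates = [
            s for r, s in zip(recalls, scores) if r >= threshold - 1e-9
        ]
        total += max(candidates) if candidates else 0.0
    return total / num_points
```

Because each per-detection term `(1 + cos(delta)) / 2` lies in [0, 1] and equals 1 only for a perfect orientation estimate, feeding orientation-similarity values into `interpolated_average` can never exceed the result obtained from the precision values at the same recall levels.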
